I’ve had great conversations with research computing and data team managers this week. And while the the discussions were great, they weren’t all sunshine and light. RCT leads are facing some common struggles right now. We’re all wrestling with two big challenges: industry hiring like mad; and the likely end of a pandemic wave.

Industry’s sustained hiring spree means we all have to fight to hire and retain team members. Anyone with strong computational skills and a LinkedIn profile has to fend off requests for conversations about attractive positions. To hire and retain we need strong career ladders, which is something we can work on together. We need to find ways to build on the strengths of our teams and our work places, which is something where we can benefit from can sharing ideas.

And as another pandemic wave looks to subside, we face the opportunity and challenge of more in-office work. As was pretty clear even in 2020, “everything resets back to 2019” isn’t going to happen for most of our teams. On one hand, pure remote is out of reach for many. It may violate institutional or even funding requirements; and it doesn’t take full advantage of the real strength of being embedded in a research ecosystem. On the other hand, being expected to commute in every day like clockwork is going to rankle many team members, and the opportunity to hire from a broader geographic catchment area is too valuable for a lot of teams to forgo entirely.

So many of us are wrestling with what hybrid work arrangements will look like. Hybrid is going to be much harder than in-person or 100%-remote teams, each of which has pretty clear expectations. There’s mostly just one set of best practices for working on-site, and one set of emerging practices for 100% remote. Hybrid will be different. A whole spectrum of arrangements are “hybrid”; there’ll end up being a few clusters of good practices depending on what teams decide to aim for. Finding and disseminate what works for our very client-driven, interdisciplinary work and teams will take a lot of knowledge-sharing

Luckily, there’s wider recognition that to face these and other challenges, we need to improve sharing of ideas across research computing and data teams. Communities like this one are growing, as are sub-discipline groups like RSE societies. Grassroots conferences are starting to appear.

There’s starting to be institutional and funder awareness of the needs, too. I’m really pleased by this NSF call, “Research Coordination Networks: Fostering and Nurturing a Diverse Community of CI Professionals”. Recognition of the need for research cyber infrastructure professional communities broadly is really positive. I’d really like to see a proposal go in for a community of CI/RCT managers and leaders; there’s a limit to how well we can professionalize teams without there being professionalization of their managers and leads. If you or colleagues are thinking of putting such a proposal in and need a letter of support or if there’s any other way I or the readers here might help, please let me know.

Speaking of the newsletter - as a housekeeping matter, there are some changes in the roundup this week, and more will come.

First, under Systems and Emerging Technologies I’ve been covering way more of the “Feeds and Speeds” stuff that you can get from a million other places. That’s not what this newsletter should be about. I won’t be covering routine hardware updates there any more unless there is a startlingly new capability that’s beginning to be useable. This will have another advantage which I’ll talk about next week.

Secondly, no more routine research computing conferences in Events or Calls for Submissions. It was too much of a grab bag, and frankly not super interesting or helpful while nonetheless taking up a lot of room. If there’s a conference or event thak is so relevant that it’s worth calling out, I’ll put it in the corresponding section.

Any other changes you’d like to see? Anything you don’t want changed? What would make this newsletter more useful to you? Let me know - this newsletter is only good to the extent that it’s useful to you. Hit reply, or email me at jonathan@researchcomputingteams.org; I’m always happy to get feedback (especially about what improvements are needed!), or just chat.

And with that, on to the roundup…

The Void of Empowerment - Aviv Ben-Yosef

Ben-Yosef talks about the void managers and leaders of successful teams can feel in steady-state when, frankly, they don’t feel needed. Stuff is working well, there are relatively few fires to put out, and the Universe is unfolding as it should.

But that’s success. It gives options for growth, letting you take on new responsibilities while delegating work to team members for their growth.

One thing I really like about this article is a distinction it makes: working on the team vs working in the team. Working on the team via mentorship and coaching, or hiring or improving processes, should be the priority. Working in the team - putting out fires, rolling up the sleeves and helping with work - is unlikely to ever reach 0% of your time, but if you’re putting out the same kinds of fires or pitching in on the same kind of work routinely, that’s a problem that should be addressed.

Work Sample Tests - Jacob Kaplan-Moss

Technical interviews via Codespaces - Ian Olsen

One of the things we can do to make ourselves more successful hiring is having really clear goals for what we’re hiring for - what a successful hire would be doing day to day in the job. Then we can perform principled and transparent evaluations of the candidate’s ability to start doing those things.

In the first resource, Kaplan-Moss has fleshed out his series on what does and doesn’t make a good work sample test in technology. There are good introductory sections, including goals for a fair test (they should simulate real work, be no more than 3 hours work, be flexible with scheduling, provide as much choice as possible, use them to start discussions rather than be scored pass/fail; they shouldn’t be surprises, tested internally, and be late in the process). Then he talks about different options - coding homework, pair programming, bring your own code, reverse code review, and labs and simulation environments. There’s good concluding sections too, especially on what doesn’t work (including white-boarding code).

If you are going to provide one of these environments, new tooling makes it easier. In the second article, Olsen talks about using Github Codespaces for some of the work sample tests described above - certainly coding, pair-programming, code review, and labs. Obviously other cloud instances, or maybe even a vagrant VM, has some of the same environment; but Codespaces tight coupling with GitHub and VSCode makes it particularly useable if you use those tools at work.

(Relatedly, here’s a nice DevContainer template for doing ML work as an example.)

Don’t Hire a Former Employee Before Asking These Questions - Marlo Lyons, HBR

“Boomerang” employees are employees that come back after leaving, or in our case hiring trainees who have worked with us in the past. They bring a lot to the table. They already know the work; they know the people; and they themselves are known quantities. Hiring is incredibly uncertain, and the clarity of already knowing how a given employee will work out is not to be dismissed lightly.

But, Lyons tells us not to leap on the opportunity to hire boomerang employees just because it’s the easy thing. Maybe they are exactly what you need right now. But maybe what you need now and what you needed then are different. If they are what you need, then by all means hire them - their existing knowledge of the work and relationships with team members are invaluable, and they now bring important additional perspective! But be sure and ask the question first.

Hot Streaks in Your Career Don’t Happen by Accident - Derek Thompson, The Atlantic

In this article, Thompson reports on the work of Northwestern University economist Dashun Wang on hot streaks in people’s careers. The subhed of the article: “First explore. Then exploit.” gives a pretty good summary.

Hot streaks — streaks of successes — come, in Wang’s research, are “equally likely to happen among young, mid-career, and late-career artists and scientists”. Rhey come preferentially at times after the person has gone through an exploration/experimental stage, of trying different things, until they find success. Then the “hot streak” is a series of moves exploiting this new approach; as that winds down (due to lack of further opportunities to use the approach, or simple boredom), a new “explore” phase starts again.

This applies to us in science support just as much as scientists. As we try new strategies, either as a people manager, technical lead, or product lead, some of our experiments are going to be more successful than others. But if we’re not experimenting and trying new and different things, we’re not going to be able to have new hot streaks.

Research Software Engineering to reduce the environmental impact of a long-running script - Univ of Bristol Advanced Computing Research Centre

RSE Case Studies - N8CIR

In research computing, too much of the work we do goes unsung. This is a problem for a number of reasons - institutional decision makers don’t see the impact of our work, and other researchers we could be working with don’t have a good sense of what we do. As with the technical areas of research computing, documentation is key!

In the first article, U Bristol describes in detail the 25,000x speedup of a perl script to find a minimal set of genetic markers from a genotyping dataset. It’s a nice long-form writeup which gives insight into how U Bristol team members work, what working with them looks like, how long a project takes, and the benefits other than just speedup that accrue to the researchers.

The N8 Centre of Excellence in Computationally Intensive Research (N8 CIR) has been writing up case studies of larger-scale projects for a while, in concise accessible slide format, broken down by subject area. While the shorter form writeup doesn’t offer the same “day in the life” description of how works, the collection of 15 of them gives an overview of the kinds of projects this team can take on. The PDFs of the slides are also very attractive and professional looking, at the cost of having to download and open a PDF.

Would one of these approaches work well for advertising your team’s work? Could regular communications with your clients be repurposed and be distilled into publicly-viewable case studies?

Petabase-scale sequence alignment catalyses viral discovery - Edgar et al., Nature

Scientists discover nine new coronaviruses in 11 days using AWS - Amazon

This is a pretty cool project because it builds on research data infrastructure that’s been being built up since 2008 (the Sequence Read Archive, or SRA), previous software development effort by another group. It was also supported by a new kind of infrastructure, the “cloud innovation centre” type teams that have been funded at universities around the world by all the cloud providers, to solve a very topical problem.

The SRA is a trove of huge amounts of sequencing data. The key to this project is that the datasets deposited into SRA are usually aiming to sequence one kind of organism, but lots of other DNA and RNA gets into the samples. In particular, viruses infecting the organism, or just from the environment, get included in the sequencing, and thus in the sequencing results.

The team leading this work built their own cloud infrastructure, Serratus, and took advantage of previous work by Fred Hutch for plowing through the tens of petabytes of SRA data already hosted by each of the cloud providers. In this work, they then looked for sequences continuing genes producing RNA-dependent RNA polymerase (RdRP), a key protein in RNA virus replication. Based that search, they found all the RNA viruses in the sequence and classified them by family; in doing so they discovered nine novel (and harmless) viruses in the coronavirus family, along with countless other unknown viruses, helping build out the family trees of these pathogens. All the data is available on their website.

This is the first big science result I’ve seen coming out of the cloud innovation centres - this one being one at University of British Columbia jointly with AWS. I’ll be curious to see if it’s one a one-off or if more are coming.

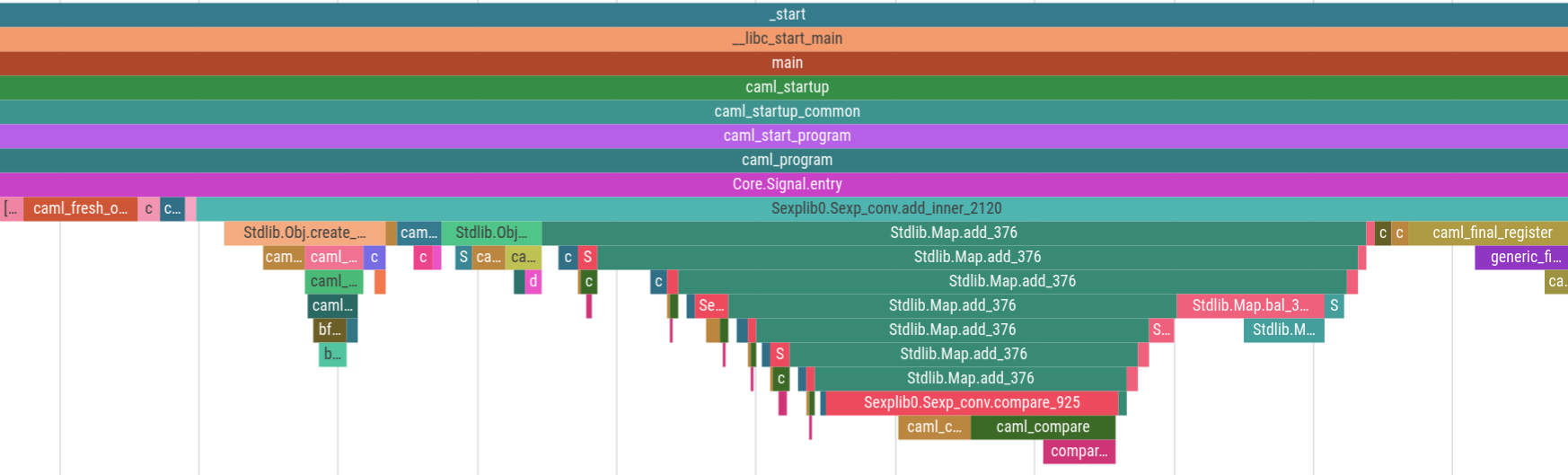

Magic-trace: Diagnosing tricky performance issues easily with Intel Processor Trace - Tristan Hume, Jane Street Blog

Interesting single-node tracing tool using Intel Processor Trace. Hume writes about magic-trace, an open-source package that lets you instrument a function, connect to a process, and have it dump out a trace (in a format compatible with the Google Perfetto trace visualization tool) of the function and the 10ms before and after the function call. Cute new performance tool to add to the arsenal.

HPC ReproHack @ Warwick - 21-31 March, on site in Warwick, UK time

SEA’S Improving Scientific Software Conference and Tutorials - 4-8 Apr, Abstracts due 31 Jan

A couple of very relevant research software development events.

The first is an HPC-targeted hackathon session, with support from RSEs, to reproduce the results of particular papers. Huge improvements have been made in reproducibility of HPC work in recent years, but it’s still much harder (for a bunch of good reasons) than desktop-scale computational work, so it’s interesting to see people trying to push this forward.

The second is a workshop at UCAR on improving scientific software in general - the demands of modern architectures, tools for data analysis and scientific workflows, best practices for software development, and more.

Scientific Python Guiding Design Principles - Scientific Python Cookiecutter Team There’s No Such Thing as Clean Code - Steve Barnegren

The first article is some simple design principles for beginning scientific python. Modestly useful but I really liked this piece of advice for some kinds of code:

Prefer “Wide” over “Deep”: It should be possible to reuse pieces of software in a way not anticipated by the original author. That is, branching out from the initial use case should enable unplanned functionality without a massive increase in complexity.

Relatedly, in the second article Barnegren urges us to realize “clean” doesn’t mean anything when it comes to code. It’s a catch-all feel-good term.

There’s lots of positive things code could be - readable/understandable (even there: by who? Another RSE? A scientist-user?), performant, extensible, robust, reusable…. but those positive things, like the strategies and priorities I talked about last week, sometimes conflict with each other. Code that’s robust (with lots of checks and constraints) could easily be less extensible. Performance often comes into conflict with readability.

In your onboarding and code review process you have the opportunity to prioritize one or two of these as being the top choices for your team, or maybe for particular projects. But again, you need to choose. “Clean” is a shape-shifting term that avoids that choice, which in turn makes it harder for you to optimize for what matters.

How to efficiently load data to memory - Jorge C. Leitão and QP Hou

As data gets bigger, tasks that seemed trivial like reading data from disk suddenly becomes a bottleneck. Here Leitão and Hou talk about the steps - the fact that more CPU is required than someone new might assume, plus the I/O requirements, and what it takes to somewhat decouple CPU from I/O, or trade off the less constrained resource for the others.

Document Intelligence: The art of PDF information extraction - Anurag Bejju, Statistics Canada

Canada’s statistics agency, StatsCan, has to deal with extracting tabular data from a lot of PDFs. In this article, Bejju talks about the current routine state of the art and challenges of doing such work, and reviews five python packages for doing so (spoiler: the recommendation is for Camelot).

Then he introduces the algorithms they’ve developed in house using packages like Camelot plus computer vision. Happily, government departments are starting to open-source more of their work, and they’ve released SLICEmyPDF under an MIT license.

The Synthetic Data Vault - Carles Sala, Felipe Alex Hofmann, Kevin Zhang, Plamen Valentinov Kolev

There are increasing number of tools for creating realistic synthetic data from real data sets. SDV (the Synthetic Data Vault) seems like a particularly well-put-together and easy to use tool for a variety of use cases, from synthetic data release to testing.

PwnKit: Local Privilege Escalation Vulnerability Discovered in polkit’s pkexec (CVE-2021-4034) - Bharat Jogi

Hopefully this isn’t the first time you’re hearing about this, because if it is and your team runs a system you’re going to have a really crummy weekend. This is an embarrassingly easy to exploit escalation-of-privilege, pop-a-root-shell vulnerability. Oh, and it’s been there since May 2009. Strip the suid bit off of pkexec and then update.

ASCR Workshop on the Management and Storage of Scientific Data: Accepted Position Papers - DOE ASCR

I’m still going through the responses to DOE’s call for stewardship of scientific software reported in #106; looks like I have some more homework to do, dozens of position papers on the storage and management of scientific data for HPC. There are some interesting position papers here by people who very much know there stuff. If you’re interested in HPC storage at all, browse around in here.

FOSDEM’22 HPC, Big Data, and Data Science devroom - 5-6 Feb

This year’s FOSDEM conference is again free and virtual. Interesting talks in the HPC track include containers in HPC, HPC in K8s or OpenStack, HPC for Social & Crime science and for Cytometry, tuning AMD GPUs, and more.

FOSDEM’22 Containers Devroom - 6 Feb

FOSDEM’22 CI/CD Devroom - 6 Feb

Several FOSDEM sessions of likely interest here, particularly around containers and CI/CD, but other tracks might be of interest too.

Howie: The Post-Incident Guide - Jeli How to Write Meaningful Retrospectives - Emily Arnott, Blameless

The key to getting better, individually or as a team, is to pay attention to how things go, and continue doing the things that lead to good results, while changing things that lead to bad results.

Pretty simple, right? And yet we really don’t like to do this.

Whether your teams run systems, develop software, curate data resources, or combinations of the three, sometimes things are going to go really badly, in a way that affects researchers. That is always going to happen. The key to reducing the frequency and severity of those bad outcomes while continuing to build trust with researchers is to learn from what happened (post-incident analysis) and communicate to the user community (sending out retrospectives).

In the first article, the Jeli team recommends running the post-incident analysis by:

It’s a good long read going into each of those steps in great detail.

In the second article, Arnott focusses especially on the writing and communication to stakeholders. Your stakeholders are researchers - they’re smart, they know things go wrong on the cutting edge sometimes, and they have extremely finely-tuned BS detectors. They deserve to know what’s gone wrong and what you’re doing to reduce the chances of that going wrong again.

How Bad is QWERTY, Really? - it’s not as much slower as everyone thinks, there also maybe aren’t as big RSI consequences as people worried, but it maybe is also easier to switch away from than you might have thought?

Why build a web app when you could build an ssh app with a really cool CLI/TUI? Really not sure if charm.sh is genius or madness.

When generics finally make it into the golang stdlib, it may make things significantly faster.

Recommendations for modern bash/zsh scripting practices.

What do you call something that’s like a playground, but instead of fun it’s for anguish and despair? Anyway, here’s a systemd playground for learning systemd.

Missed out on the OldenDays of IBM System 360? Here’s a 360/50 Simulator.

Or you could get online with an Atari 800.

Ever wanted to store entire databases within the individual entries of a key-value store, at the edge of a CDN? Store SQLite in Cloudflare Durable Objects.

The Big Time Public License v 2.0.0, a software license that’s free for noncommercial and small-business use.

Good deep dive into the performance of AMD’s 3D stacked V-Cache.

The ins and outs of LTO Tape data storage for Linux nerds.

Earn swag by fixing security vulnerabilities.

Running gromacs in the cloud, in both “hero mode” large HPC calculations and with large numbers of small high-throughput calculations. With checkpoint-restart, spot instances, and judicious choice of instance types, they find that the costs are comparable between cloud and on-prem. With scripts and templates, and extremely detailed performance breakdowns.

And that’s it for another week. Let me know what you thought, or if you have anything you’d like to share about the newsletter or management. Just email me or reply to this newsletter if you get it in your inbox.

Have a great weekend, and good luck in the coming week with your research computing team,

Jonathan

Research computing - the intertwined streams of software development, systems, data management and analysis - is much more than technology. It’s teams, it’s communities, it’s product management - it’s people. It’s also one of the most important ways we can be supporting science, scholarship, and R&D today.

So research computing teams are too important to research to be managed poorly. But no one teaches us how to be effective managers and leaders in academia. We have an advantage, though - working in research collaborations have taught us the advanced management skills, but not the basics.

This newsletter focusses on providing new and experienced research computing and data managers the tools they need to be good managers without the stress, and to help their teams achieve great results and grow their careers.