Thanks, everyone, for your responses last week.

I’ve been thinking a lot about strategy in other contexts lately - some of you will have noticed that I’ve been back on my nonsense on Twitter, about the importance of having a focus. The very insightful comments and suggestions you sent last week about how we can help more research computing teams were very on point, and I think they combine into a feasible strategy.

Because within this newsletter community we’ve built together, we have a number of strengths:

And the wide underserved community of research computing and data team managers, technical leads, and those thinking of becoming one, could really use our help.

For areas to focus on, readers mentioned the lack of resources available for new managers, especially those put in the hard position of managing yesterday’s peers; lack of ongoing mentorship; and near total absence of people talking about management in a way that sounds relatable to research computing professionals.

There was wide willingness to contribute:

And some suggestions for things we could contribute together that would be valuable:

So if we have a goal of helping other research computing teams and their managers by providing material and mentorship relevant to them, with priorities around building resources that others can easily use and the tools for others to reach out to local communities, I think the next step is to start making a plan of action.

I’ll be speaking with some people in the coming weeks trying to put together some plans. If this sounds like something that would be of interest to you, as an observer or an active participant, or if you can think of other ways this community could help other research computing teams, email me - hit reply or email jonathan@researchcomputingteams.org - or arrange a fifteen minute call with me. Either way, I’d love to hear from you.

And now, the roundup!

4 Practical Steps For Strategic Planning As A Leader - Anthony Boyd

One hard thing for new leaders to really come to terms with is that they typically have a lot more freedom in what their team does and how their team does it than might be comfortable.

Defining a strategy for how to do whatever your team is charged with doing is a big responsibility. And it’s a lot scarier than staying focused on the day-to-day of routine work. As a result, I see lots of teams or organizations with no discernible strategy whatsoever, muddling along with whatever tasks come their way - or, maybe worse, following through with zealous dedication on some idea that three years ago someone said seemed like a good idea.

So I keep an eye out for resources on defining strategies. Lots of them are way too “big” - focused on enormous organizations. Or they’re hyper-focused on things like SWOT Analyses or Business Model Canvases or Wardley Maps - which are all, you know, great, but they’re just devices to nudge thoughts and discussion into directions that might be fruitful. A 2x2 matrix or canvas or map isn’t a strategy. A concrete set of goals and priorities, informed by the context of the team and the environment in which it operates, that’s a strategy, that’s something that can help guide the routine day-to-day decisions you and team members make, and serve as a nudging guardrail to make sure you and your team are moving in the right direction.

In this article, Boyd describes a very down-to-earth process for defining and following through on a strategy, that he learned and developed during his time as a union leader. It’s simple and pedestrian and it’s all that strategy is - routinely spending some time thinking of the big picture and its context, so that the day-to-day work is steered properly.

Boyd’s steps (followed by a course correction step) are:

This can be done by yourself or with the team, and involving your manager; or you can get feedback from your manager before striking off in a direction.

For brainstorming, whether by yourself or with others, the steps laid out in another article I saw this week, Shopify’s Brainstorming Session Template, can help - in particular the iterative approach of brainstorm, assess, brainstorm … distill. And yet another article I saw, more for large organizations, about running a strategy offsite for a leadership team, is worth skimming if only to see that it’s the same as Boyd’s steps, but done in a group. One line I like from that last article - “Choose clarity over certainty”.

How to break out of the thread of doom - Tanya Reilly, LeadDev

5 situations when synchronous communication is a must - Hiba Amin, Hypercontext

We’re all spending a lot more time in written communication than we were before, and there are huge advantages! But there are some common failure modes, including having interminable conversations that don’t actually result in some conclusion. Reilly has three hints for winding up those discussions:

Speaking of that last point, Amin talks about some situations where you shouldn’t even try to communicate asynchronously if you can avoid it - they’re all areas where building relationships is the goal, or higher-bandwidth-than-just-text communications are necessary:

How to Plan Your Ideal Hybrid Work Schedule (So You Can Live Your Best Life) - Regina Borsellino

We’ve talked a lot about the challenges of arranging hybrid work for your team members, but you’re a team member too. What will work best for you?

Borsellino’s article goes into much more depth than most I’ve seen, walking you through 37 questions to help you make some decisions, under categories of:

There’s too much to summarize here, but if you haven’t yet started working through your work plans and they might be hybrid, this is a good starting point.

Because of an immune condition, I’m going to be way on the late side of coming back into the office. I like the idea of coming in 1-2 days a week, or maybe 3 days a week but just the afternoons (say) - and spending the rest of time either working from home, or some coffee shops in the neighbourhood. With our team distributed across the country, I can meet my managerial obligations that way comfortably, and it will give me a nice blend of productivity and peace of mind. The exact schedule, well, we’ll have to experiment a bit and see. What are your current plans - and what would your ideal plans be?

Minimum Viable Governance: lightweight community structure to grow your FOSS project - Justin Colannino

Growing a community around an open source research software effort to the point that there are external maintainers is a sign of huge success - but it makes things way more complicated. It’s a pain to be the sole maintainer, but at least there’s clarity in decision making.

Here Colannino describes the “Minimum Viable Governance” (MVG) set of template documents for bootstrapping a real open source governance framework. Some areas - trademark, and antitrust policy(!) - matter less for research computing, but decision making, project charter, code of conduct, and guidelines for contributing are all very relevant. This is a good starting point as is, or for thinking through the issues.

Intel C/C++ compilers complete adoption of LLVM - James R Reinders, Intel Software Blog

I didn’t even know this was in the works - the new Intel C/C++ compilers in the oneAPI suite are now based on LLVM. They’ve changed the name (icx) so that there’s no confusion and you can have the classic and new compilers installed easily. This should mean that a lot of tooling becomes available, and development moves more quickly - and it looks like compilation time has significantly improved, and performance on some benchmarks is also better. Unfortunately it doesn’t sound like Intel contributes all their LLVM work upstream.

Fortran is already in progress.

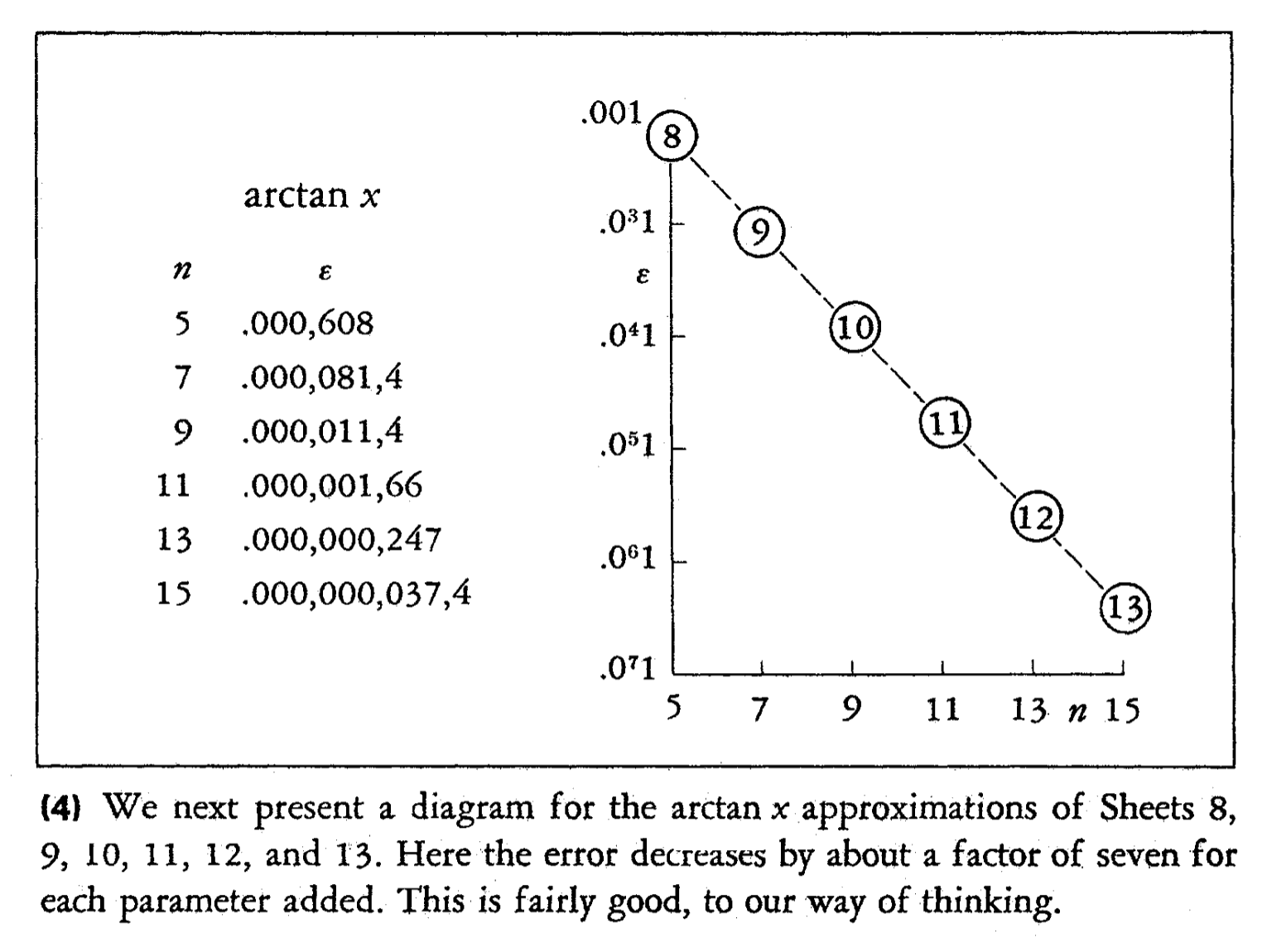

Speeding up atan2f by 50x - Francesco Mazzo

The standard glibc atan2f is well optimized, but the author didn’t need the full accuracy of atan2 for his application, didn’t need special cases like Inf handled, and did need large batches of operands calculated at once. Mazzo’s article starts by defining the approximation he used (including how he tuned parameters to minimize maximum error), then moves through vectorization, minimizing type conversion, avoiding NaN-handling (he just wants them to propagate), taking advantage of fused multiply-adds, and finally unrolling to manually vectorize.
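As a rough illustration of the accuracy-for-speed trade-off, here is a minimal Python sketch of an approximate atan2. This is not Mazzo’s approximation or his code: it swaps in a simple, well-known approximation of atan on [-1, 1] (the 0.273 coefficient, good to roughly 0.004 radians), and the function names are made up for this example. Like the article’s version, it makes no attempt to handle Inf or signed zeros.

```python
import math

def approx_atan_unit(z):
    """Approximate atan(z) for |z| <= 1 (assumed max error ~0.004 rad)."""
    return z * (math.pi / 4 + 0.273 * (1.0 - abs(z)))

def approx_atan2(y, x):
    """Approximate atan2(y, x) using range reduction to |z| <= 1."""
    ax, ay = abs(x), abs(y)
    if ax == 0.0 and ay == 0.0:
        return 0.0
    # Always divide the smaller magnitude by the larger, so |z| <= 1.
    swap = ay > ax
    z = ax / ay if swap else ay / ax
    r = approx_atan_unit(z)
    if swap:
        r = math.pi / 2 - r   # atan(1/z) = pi/2 - atan(z) for z > 0
    if x < 0:
        r = math.pi - r       # reflect into the left half-plane
    if y < 0:
        r = -r                # mirror into the lower half-plane
    return r

if __name__ == "__main__":
    for y, x in [(1.0, 2.0), (-3.0, 0.5), (0.2, -1.0)]:
        print(y, x, approx_atan2(y, x), math.atan2(y, x))
```

The speedups in the article come from doing this kind of calculation branch-free over large batches with SIMD instructions, which plain Python can’t show; the sketch only illustrates the range reduction and the cheap polynomial.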

How to write fast Rust Code - Renato Athaydes

Rust is gaining some research software development adherents for new projects that otherwise might be written in something like C++. Here Athaydes walks us through some gotchas that will be familiar to those of us who have had to write performant code in the past, but with some extra twists due to Rust’s approach to memory:

Athaydes then points to some tools to help, such as a microbenchmarking tool criterion.rs, and a flamegraph tool for profiling, and walks through some cases of using those.

It’s Time to Retire the CSV - Alex Rasmussen

Despite this ubiquity and ease of access, CSV is a wretched way to exchange data. The CSV format itself is notoriously inconsistent, with myriad competing and mutually-exclusive formats that often coexist within a single dataset (or, if you’re particularly unlucky, a single file). Exporting a dataset as a CSV robs it of a wealth of metadata that is very hard for the reader to reconstruct accurately, and many programs’ naïve CSV parsers ignore the metadata reconstruction problem entirely as a result. In practice, CSV’s human-readability is more of a liability than an asset.

Hear, hear! CSV - and, I’d argue, other human-readable text formats - were a costly mistake. Rasmussen itemizes some of the problems - absent metadata, no typing, poor precision - and I’d add that it’s surprisingly slow to generate (serializing floating point to text is a shockingly time consuming operation!). Rasmussen suggests Avro, Parquet, Arrow, HDF5, SQLite, even XML - almost anything else, really.

CSVs are especially bad because they’re used to exchange arbitrary data, but I’d continue the point and say that in research computing we rely way too much on text-based file formats, instead of carefully defining APIs and allowing a number of back-end storage representations. (I’m looking at you, bioinformatics, with your “tab-separated files are a way of life” nonchalance).
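The “no typing” problem is easy to see with a small sketch using only the Python standard library. The row contents, table name, and helper function names here are made up for illustration; the point is just that the same three values come back as untyped strings from CSV, but with their types intact from SQLite (one of the alternatives Rasmussen lists).

```python
import csv
import io
import sqlite3

row = (1, 0.1, None)  # an int, a float, and a missing value

def csv_round_trip(values):
    """Write one row to an in-memory CSV and read it back."""
    buffer = io.StringIO()
    csv.writer(buffer).writerow(values)
    buffer.seek(0)
    return next(csv.reader(buffer))

def sqlite_round_trip(values):
    """Write one row to an in-memory SQLite table and read it back."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE samples (id INTEGER, value REAL, note TEXT)")
    conn.execute("INSERT INTO samples VALUES (?, ?, ?)", values)
    result = conn.execute("SELECT id, value, note FROM samples").fetchone()
    conn.close()
    return result

if __name__ == "__main__":
    print(csv_round_trip(row))     # ['1', '0.1', '']
    print(sqlite_round_trip(row))  # (1, 0.1, None)
```

After the CSV round trip the reader has to guess that `'1'` was an integer and that the empty string was a missing value rather than an empty note; the SQLite round trip carries that information with the data.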

Via a response, I see that W3C had a multi-year effort to try to define standards around CSVs, and, well, it ended in 2016 and this is the first time you’ve heard of it, isn’t it.

AMD Infinity Hub - AMD

Something NVIDIA’s always done really well is to have a place to go to get information on and distributions of CUDA-powered applications. AMD is belatedly catching on; new to me is this page for AMD-powered tools with ROCm, their software stack (including OpenCL and HIP for programming, and libraries built on top of that). It includes information and links to container images that it looks like AMD maintains.

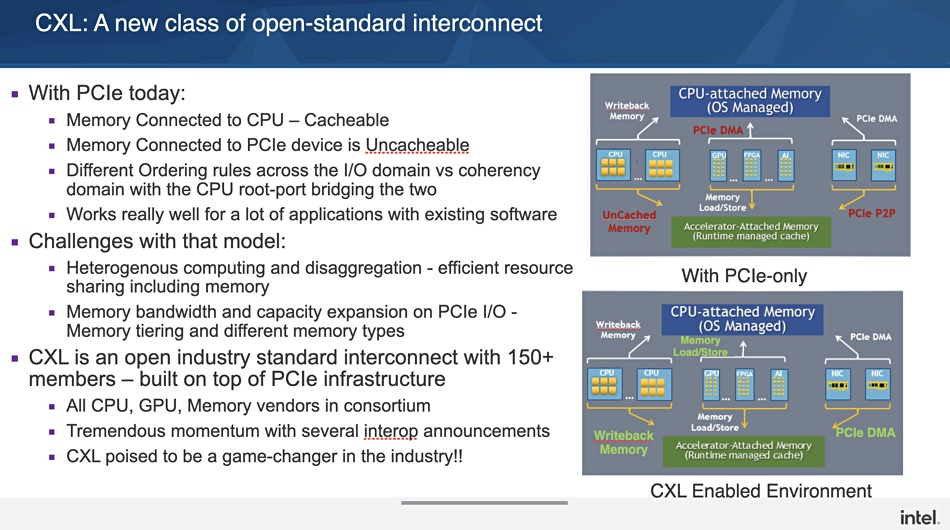

Intel Finally Gets Chiplet Religion With Server Chips - Timothy Prickett Morgan, The Next Platform

With AMX, Intel Adds AI/ML Sparkle to Sapphire Rapids - Nicole Hemsoth, The Next Platform

Intel sees CXL as rack-level disaggregator with Optane connectivity - Chris Mellor, Blocks and Files

In the first two articles, Morgan and Hemsoth give new architecture announcements from Intel their usual well-researched coverage.

Morgan covers the big picture, the architectural changes coming for Xeon CPUs (“Sapphire Rapids”) and HPC GPUs (“Ponte Vecchio”). Sapphire Rapids will be based on chiplet “P (performance)-cores” with cores, accelerators, memory controllers, cache, I/O resources, etc., and Embedded Multi-die Interconnect Bridge (EMIB) links between them tying the whole die together, including access to high-bandwidth memory.

Hemsoth digs into “AMX”, Advanced Matrix Extensions, aimed specifically at AI/ML but which will be useful for more traditional technical computing applications as well. As with ARM’s Scalable Matrix Extension (SME) announcement (#84), this will support small tiles of matrices, but it seems at a quick read to be more fully featured, including for instance a matrix tile multiply-add (C+=A*B). Hemsoth says:

The best way for now to think of AMX is that it’s a matrix math overlay for the AVX-512 vector math units.
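To picture what a tile multiply-accumulate buys you, here is a purely conceptual Python sketch: a larger matrix product is broken into small tiles, and each step is one small C += A*B on tiles. Nothing here is AMX-specific; the function names and the tile size of 2 are assumptions for illustration, and real hardware tiles have fixed shapes and data types that this ignores.

```python
def tile_multiply_accumulate(c, a, b):
    """In-place c += a @ b for small dense tiles stored as lists of rows."""
    for i in range(len(a)):
        for j in range(len(b[0])):
            c[i][j] += sum(a[i][k] * b[k][j] for k in range(len(b)))

def tiled_matmul(a, b, tile=2):
    """Multiply a (n x m) by b (m x p) one tile at a time."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, p, tile):
            for k0 in range(0, m, tile):
                # "Load" the three tiles (edge tiles may be smaller).
                a_tile = [r[k0:k0 + tile] for r in a[i0:i0 + tile]]
                b_tile = [r[j0:j0 + tile] for r in b[k0:k0 + tile]]
                c_tile = [r[j0:j0 + tile] for r in c[i0:i0 + tile]]
                # The single operation a matrix unit would provide.
                tile_multiply_accumulate(c_tile, a_tile, b_tile)
                # "Store" the accumulated tile back into c.
                for di, r in enumerate(c_tile):
                    c[i0 + di][j0:j0 + tile] = r
    return c

if __name__ == "__main__":
    print(tiled_matmul([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]]))
```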

In the third article, Mellor summarizes Intel’s presentation at IEEE Hot Interconnects, covering their off-chip plans, involving Compute Express Link (CXL). From Mellor, “CXL is based on the coming PCIe Gen-5.0 bus standard to interconnect processors and fast, low-latency peripheral devices.” As with the origins of InfiniBand, the plan is to extend on-node fast access to data and peripherals off-node, providing (so the plan goes) a cluster-wide memory and (with persistent memory like Optane) storage tier. A slide from the deck outlining the plan (courtesy the Blocks and Files post) is below.

Is GitHub Actions Suitable for Running Benchmarks? - Jaime Rodríguez-Guerra, Quansight Labs

As Betteridge’s Law would suggest, the answer to the headline question is no, but it’s closer than I would have expected.

Rodríguez-Guerra walks us through trying GitHub Actions CI workflows for benchmarking, with a large number of replications of scikit-image benchmarks. The results are much less all-over-the-place than I would have expected - the standard deviation is 5% - but unless the kinds of per-commit changes you make are routinely expected to cause performance changes much greater than 5%, that’s probably just too noisy.
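A back-of-the-envelope version of that reasoning, as a minimal Python sketch: estimate the relative noise from repeated runs, and only treat a change as real if it is several times larger than that noise. The function names, the sample timings, and the factor of 3 are all assumptions for illustration, not anything taken from the article.

```python
import statistics

def relative_noise(times):
    """Standard deviation of run times as a fraction of their mean."""
    return statistics.stdev(times) / statistics.mean(times)

def change_is_distinguishable(baseline, candidate, factor=3.0):
    """Crude check: is the relative change in mean run time larger than
    `factor` times the noisier of the two sets of measurements?"""
    noise = max(relative_noise(baseline), relative_noise(candidate))
    base_mean = statistics.mean(baseline)
    change = abs(statistics.mean(candidate) - base_mean) / base_mean
    return change > factor * noise

if __name__ == "__main__":
    baseline = [1.00, 1.05, 0.95, 1.02, 0.98]   # made-up timings, seconds
    slightly_slower = [t * 1.03 for t in baseline]
    much_slower = [t * 1.5 for t in baseline]
    print(relative_noise(baseline))
    print(change_is_distinguishable(baseline, slightly_slower))
    print(change_is_distinguishable(baseline, much_slower))
```

With noise of a few percent, a 3% regression is lost in the scatter while a 50% one stands out, which is the sense in which noisy CI runners can still be useful for coarse, early-stage optimization.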

So this probably isn’t good news for benchmarking (although in fairness it might be useful in early stages of optimizing code when you really are going for substantial improvements) but it does suggest a pretty decent reliability in terms of run times for large Actions, which is interesting to know about.

Workshop on Integrating High-Performance and Quantum Computing - 18-22 Oct, Virtual, Abstracts due 10 Sept

Held as part of IEEE Quantum Week, this workshop invites “high-quality, abstract submissions on all topics related to integrating QC into the existing binary computing eco-System, with a particular focus on combining HPC and QC.” Topics include everything from data centres to programming models:

Webinar: Get OpenMP Tasks to Do Your Work - 5pm UTC 22 Sept

A one-hour introductory webinar covering OpenMP tasks.

Pan-Structural Variation Hackathon in the Cloud - 10-13 Oct

DNAnexus and Baylor College of Medicine’s human genome sequencing centre are hosting a 4-day hackathon to build pipelines to analyze large datasets in a cloud infrastructure.

Cloud Learning and Skills Sessions (CLASS) Fall 2021 Advanced Cohort - 12 Oct - 27 Jan, $4,000 - $5,350

This is interesting - training aimed squarely at our team members:

Cloud Learning and Skills Sessions is a program that provides research computing and data professionals (those who support researchers) the necessary training to effectively leverage cloud platforms for research workflows. A combination of vendor-neutral guidance across cloud providers, and training on the tools and technologies supported by public cloud providers, allows a broad range of research use-cases to more effectively use these important resources. Participants join the CLASS community of practice where they can share information and lessons learned.

It’s not cheap but it looks like it covers a wide range of cloud technologies, deploying real applications on them, and having someone review your architecture.

Windows users have long had Python Launcher for keeping track of pythons installed on the system; now there’s a Python launcher for Unix.

Want the code in your IDE to look kind of like (to my eye) the default TeX serifed fonts, computer modern roman? Try New Heterodox Mono, I guess.

Make your GitHub Actions (many of which run in Ubuntu containers) faster by turning off initramfs and man db updates, amongst other things.

With GitHub no longer using passwords from the command line, now might be a good time to use security keys. I’ve been using a Yubikey 5 for a while and it works fine.

Twitter thread describing a number of use cases of the Linux perf command, with examples.

Cryptography is hard. Here are a number of common gotchas.

Getting started with tmux.

Write shell scripts in JavaScript with zx.

As longtime readers know, I think constraint solvers, theorem provers, and a host of math-y tools are woefully underused in research computing. Here’s an absurdly easy to use wrapper for z3 in Python.

A good quick overview on B-trees and a few of the optimizations necessary to make them actually perform well for database-type applications.